OpenClaw Integrates VirusTotal Scanning to Detect Malicious ClawHub Skills

OpenClaw (formerly Moltbot and Clawdbot) has announced that it’s partnering with Google-owned VirusTotal to scan skills that are being uploaded to ClawHub, its skill marketplace, as part of broader efforts to bolster the security of the agentic ecosystem.

“All skills published to ClawHub are now scanned using VirusTotal’s threat intelligence, including their new Code Insight capability,” OpenClaw’s founder Peter Steinberger, along with Jamieson O’Reilly and Bernardo Quintero, said. “This provides an additional layer of security for the OpenClaw community.”

The process essentially entails creating a unique SHA-256 hash for every skill and cross-checking it against VirusTotal’s database for a match. If it’s not found, the skill bundle is uploaded to the malware scanning tool for further analysis using VirusTotal Code Insight.
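The hash-then-lookup flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpenClaw’s or VirusTotal’s actual code: the `known_verdicts` dictionary is a hypothetical stand-in for VirusTotal’s database, and `submit_for_analysis` is a hypothetical placeholder for the upload and Code Insight step.

```python
import hashlib
from typing import Callable, Dict


def sha256_of_bundle(bundle: bytes) -> str:
    """Return the hex SHA-256 digest of a skill bundle's raw bytes."""
    return hashlib.sha256(bundle).hexdigest()


def check_skill(
    bundle: bytes,
    known_verdicts: Dict[str, str],
    submit_for_analysis: Callable[[bytes], str],
) -> str:
    """Look up the bundle's hash first; fall back to a full scan on a miss.

    known_verdicts:      hypothetical stand-in for the scanning service's database
    submit_for_analysis: hypothetical stand-in for uploading the bundle for analysis
    """
    digest = sha256_of_bundle(bundle)
    if digest in known_verdicts:
        # Hash already known: reuse the stored verdict, no upload needed.
        return known_verdicts[digest]
    # Hash not found: submit the bundle and remember the result.
    verdict = submit_for_analysis(bundle)
    known_verdicts[digest] = verdict
    return verdict
```

Because the lookup key is a digest of the bundle’s bytes, any change to a skill, however small, produces a different hash and therefore triggers a fresh analysis rather than reusing an old verdict.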

Skills that have a “benign” Code Insight verdict are automatically approved by ClawHub, while those marked suspicious are flagged with a warning. Any skill that’s deemed malicious is blocked from download. OpenClaw also said all active skills are re-scanned on a daily basis to detect scenarios where a previously clean skill becomes malicious.
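The verdict-to-action policy and the daily re-scan can be illustrated with a short Python sketch. The three verdict labels come from the description above; everything else is an assumption made for illustration, including the `Action` enum, the `rescan` helper, and the choice to treat an unrecognized verdict as a warning, which OpenClaw has not specified.

```python
from enum import Enum
from typing import Callable, Dict


class Action(Enum):
    APPROVE = "approve"
    WARN = "warn"
    BLOCK = "block"


# Mapping taken from the policy described in the article.
POLICY = {
    "benign": Action.APPROVE,
    "suspicious": Action.WARN,
    "malicious": Action.BLOCK,
}


def action_for(verdict: str) -> Action:
    """Map a verdict label to a marketplace action.

    Assumption: unknown labels fall back to WARN (not stated by OpenClaw).
    """
    return POLICY.get(verdict.strip().lower(), Action.WARN)


def rescan(skills: Dict[str, str], scan: Callable[[str], str]) -> Dict[str, Action]:
    """Re-scan every active skill and return those whose action changed.

    skills: maps skill name to its last known verdict (updated in place)
    scan:   hypothetical callback returning a fresh verdict for a skill name
    """
    changed = {}
    for name, old_verdict in list(skills.items()):
        new_verdict = scan(name)
        if action_for(new_verdict) != action_for(old_verdict):
            changed[name] = action_for(new_verdict)
        skills[name] = new_verdict
    return changed
```

Running something like `rescan` on a schedule is what would catch a skill that was clean at publication time but later starts fetching a malicious payload.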

That said, OpenClaw maintainers also cautioned that VirusTotal scanning is “not a silver bullet” and that there is a possibility that some malicious skills that use a cleverly concealed prompt injection payload may slip through the cracks.

In addition to the VirusTotal partnership, the platform is expected to publish a comprehensive threat model, public security roadmap, formal security reporting process, as well as details about the security audit of its entire codebase.

The development comes in the aftermath of reports that found hundreds of malicious skills on ClawHub, prompting OpenClaw to add a reporting option that allows signed-in users to flag a suspicious skill. Multiple analyses have uncovered that these skills masquerade as legitimate tools, but, under the hood, they harbor malicious functionality to exfiltrate data, inject backdoors for remote access, or install stealer malware.

“AI agents with system access can become covert data-leak channels that bypass traditional data loss prevention, proxies, and endpoint monitoring,” Cisco noted last week. “Second, models can also become an execution orchestrator, wherein the prompt itself becomes the instruction and is difficult to catch using traditional security tooling.”

The recent viral popularity of OpenClaw, the open-source agentic artificial intelligence (AI) assistant, and Moltbook, an adjacent social network where autonomous AI agents built atop OpenClaw interact with each other on a Reddit-style platform, has raised security concerns.

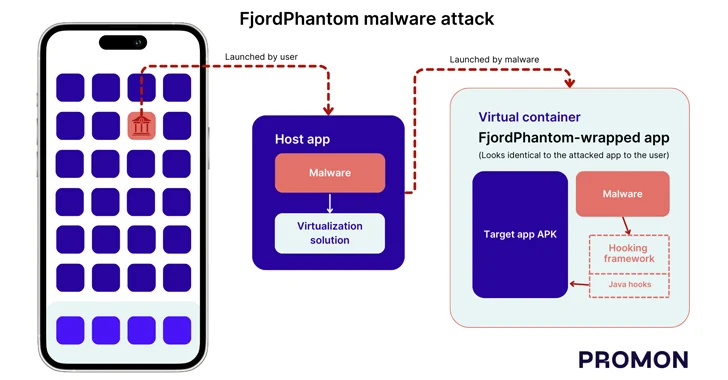

While OpenClaw functions as an automation engine to trigger workflows, interact with online services, and operate across devices, the entrenched access given to skills, coupled with the fact that they can process data from untrusted sources, can open the door to risks like malware and prompt injection.

In other words, the integrations, while convenient, significantly broaden the attack surface and expand the set of untrusted inputs the agent consumes, turning it into an “agentic trojan horse” for data exfiltration and other malicious actions. Backslash Security has described OpenClaw as an “AI With Hands.”

“Unlike traditional software that does exactly what code tells it to do, AI agents interpret natural language and make decisions about actions,” OpenClaw noted. “They blur the boundary between user intent and machine execution. They can be manipulated through language itself.”

OpenClaw also acknowledged that the power wielded by skills – which are used to extend the capabilities of an AI agent, from controlling smart home devices to managing finances – can be abused by bad actors, who can leverage the agent’s access to tools and data to exfiltrate sensitive information, execute unauthorized commands, send messages on the victim’s behalf, and even download and run additional payloads without their knowledge or consent.

What’s more, with OpenClaw being increasingly deployed on employee endpoints without formal IT or security approval, the elevated privileges of these agents can further enable shell access, data movement, and network connectivity outside standard security controls, creating a new class of Shadow AI risk for enterprises.

“OpenClaw and tools like it will show up in your organization whether you approve them or not,” Astrix Security researcher Tomer Yahalom said. “Employees will install them because they’re genuinely useful. The only question is whether you’ll know about it.”

Some of the glaring security issues that have come to the fore in recent days are below –

- A now-fixed issue identified in earlier versions that could cause proxied traffic to be misclassified as local, bypassing authentication for some internet-exposed instances.

- “OpenClaw stores credentials in cleartext, uses insecure coding patterns including direct eval with user input, and has no privacy policy or clear accountability,” OX Security’s Moshe Siman Tov Bustan and Nir Zadok said. “Common uninstall methods leave sensitive data behind – and fully revoking access is far harder than most users realize.”

- A zero-click attack that abuses OpenClaw’s integrations to plant a backdoor on a victim’s endpoint for persistent control when a seemingly harmless document is processed by the AI agent, resulting in the execution of an indirect prompt injection payload that allows it to respond to messages from an attacker-controlled Telegram bot.

- An indirect prompt injection embedded in a web page, which, when parsed as part of an innocuous prompt asking the large language model (LLM) to summarize the page’s contents, causes OpenClaw to append an attacker-controlled set of instructions to the ~/.openclaw/workspace/HEARTBEAT.md file and silently await further commands from an external server.

- A security analysis of 3,984 skills on the ClawHub marketplace has found that 283 skills, about 7.1% of the entire registry, contain critical security flaws that expose sensitive credentials in plaintext through the LLM’s context window and output logs.

- A report from Bitdefender has revealed that malicious skills are often cloned and re-published at scale using small name variations, and that payloads are staged through paste services such as glot.io and public GitHub repositories.

- A now-patched one-click remote code execution vulnerability affecting OpenClaw that could have allowed an attacker to trick a user into visiting a malicious web page that could cause the Gateway Control UI to leak the OpenClaw authentication token over a WebSocket channel and subsequently use it to execute arbitrary commands on the host.

- OpenClaw’s gateway binds to 0.0.0.0:18789 by default, exposing the full API to any network interface. Per data from Censys, there are over 30,000 exposed instances accessible over the internet as of February 8, 2026, although most require a token value in order to view and interact with them.

- In a hypothetical attack scenario, a prompt injection payload embedded within a specially crafted WhatsApp message can be used to exfiltrate “.env” and “creds.json” files, which store credentials, API keys, and session tokens for connected messaging platforms from an exposed OpenClaw instance.

- A misconfigured Supabase database belonging to Moltbook that was left exposed in client-side JavaScript, making secret API keys of every agent registered on the site freely accessible, and allowing full read and write access to platform data. According to Wiz, the exposure included 1.5 million API authentication tokens, 35,000 email addresses, and private messages between agents.

- Threat actors have been found exploiting Moltbook’s platform mechanics to amplify reach and funnel other agents toward malicious threads that contain prompt injections to manipulate their behavior and extract sensitive data or steal cryptocurrency.

- “Moltbook may have inadvertently also created a laboratory in which agents, which can be high-value targets, are constantly processing and engaging with untrusted data, and in which guardrails aren’t set into the platform – all by design,” Zenity Labs said.
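The default-bind issue in the list above comes down to which address a service listens on: 0.0.0.0 means every network interface, while a loopback address such as 127.0.0.1 restricts access to the local machine. The following Python sketch shows one way to classify a bind address using the standard `ipaddress` module. The `classify_bind` helper is hypothetical and is not part of OpenClaw; it also accepts only literal IP addresses, not hostnames.

```python
import ipaddress


def classify_bind(host: str) -> str:
    """Classify a literal IP bind address by how widely it listens.

    Raises ValueError if `host` is not a valid IPv4 or IPv6 address.
    """
    addr = ipaddress.ip_address(host)
    if addr.is_unspecified:
        # 0.0.0.0 or :: listens on every interface the host has.
        return "all interfaces"
    if addr.is_loopback:
        # 127.0.0.0/8 or ::1 is reachable only from the same machine.
        return "loopback only"
    # Any other address is reachable through that one interface.
    return "single interface"


for candidate in ("0.0.0.0", "127.0.0.1", "::1"):
    print(candidate, "->", classify_bind(candidate))
```

A check like this could sit in a deployment script to flag an "all interfaces" result before a gateway is started on a machine with a public IP address.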

“The first, and perhaps most egregious, issue is that OpenClaw relies on the configured language model for many security-critical decisions,” HiddenLayer researchers Conor McCauley, Kasimir Schulz, Ryan Tracey, and Jason Martin noted. “Unless the user proactively enables OpenClaw’s Docker-based tool sandboxing feature, full system-wide access remains the default.”

Among other architectural and design problems identified by the AI security company are OpenClaw’s failure to filter out untrusted content containing control sequences, ineffective guardrails against indirect prompt injections, modifiable memories and system prompts that persist into future chat sessions, plaintext storage of API keys and session tokens, and the lack of explicit user approval before executing tool calls.
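To make the last point concrete, an explicit approval step before tool execution might look like the following Python sketch. It is a generic illustration of the pattern, not OpenClaw’s design or HiddenLayer’s recommendation; `run_tool`, `ApprovalDenied`, and the `approve` callback are hypothetical names introduced here.

```python
from typing import Any, Callable, Dict


class ApprovalDenied(Exception):
    """Raised when the user declines a proposed tool call."""


def run_tool(
    name: str,
    args: Dict[str, Any],
    tools: Dict[str, Callable[..., Any]],
    approve: Callable[[str, Dict[str, Any]], bool],
) -> Any:
    """Execute a registered tool only after the approval callback says yes.

    tools:   registry mapping tool names to callables
    approve: callback shown the tool name and arguments; returns True to allow
    """
    if name not in tools:
        raise KeyError(f"unknown tool: {name}")
    if not approve(name, args):
        # The model asked for the call, but the human said no.
        raise ApprovalDenied(f"user declined {name}")
    return tools[name](**args)
```

The key design choice is that the decision to run a tool is made outside the language model, so a prompt injection that convinces the model to request a call still has to get past the `approve` callback.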

In a report published last week, Permiso Security argued that the security of the OpenClaw ecosystem is much more crucial than app stores and browser extension marketplaces owing to the agents’ extensive access to user data.

“AI agents get credentials to your entire digital life,” security researcher Ian Ahl pointed out. “And unlike browser extensions that run in a sandbox with some level of isolation, these agents operate with the full privileges you grant them.”

“The skills marketplace compounds this. When you install a malicious browser extension, you’re compromising one system. When you install a malicious agent skill, you’re potentially compromising every system that agent has credentials for.”

The long list of security issues associated with OpenClaw has prompted China’s Ministry of Industry and Information Technology to issue an alert about misconfigured instances, urging users to implement protections to secure against cyber attacks and data breaches, Reuters reported.

“When agent platforms go viral faster than security practices mature, misconfiguration becomes the primary attack surface,” Ensar Seker, CISO at SOCRadar, told The Hacker News via email. “The risk isn’t the agent itself; it’s exposing autonomous tooling to public networks without hardened identity, access control, and execution boundaries.”

“What’s notable here is that the Chinese regulator is explicitly calling out configuration risk rather than banning the technology. That aligns with what defenders already know: agent frameworks amplify both productivity and blast radius. A single exposed endpoint or overly permissive plugin can turn an AI agent into an unintentional automation layer for attackers.”