Josh Bongard interview: The roboticist who wants to bring AI into contact with the real world

WHO’S in charge, your brain or your body? The answer may seem obvious, but there is plenty of evidence to suggest that our physiology has dramatically affected the way we think. This idea of embodied cognition could hold important lessons for those trying to build genuinely intelligent machines – artificial intelligences that learn and think and can generalise their knowledge to all manner of tasks, like humans.

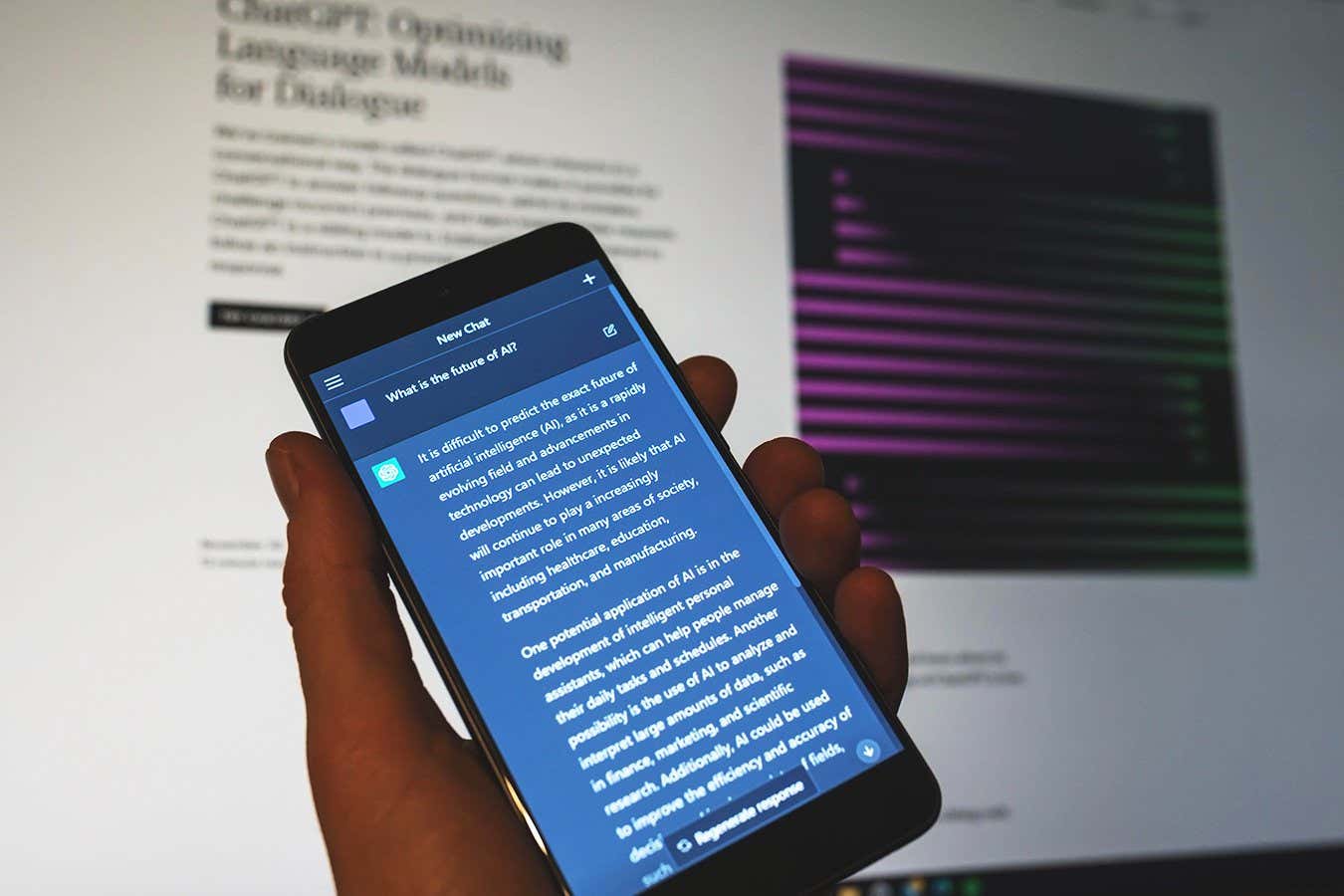

Josh Bongard, a roboticist at the University of Vermont, is among those who insist AIs will only fulfil their promise if they can directly experience and interact with the physical world. That is a far cry from AIs like ChatGPT, whose only interactions with the world come via the abstract medium of language. But the field of embodied AI is pushing for the convergence of artificial intelligence and robotics, and Bongard is at its forefront.

He reckons we need to rethink our approach to both disciplines. Simply integrating an AI chatbot with a robotic arm, as Google has done with its PaLM-E system (pictured below), may not be enough. Instead, Bongard focuses on “evolutionary robotics”, which leverages the principles of natural selection to rapidly iterate through robot designs, many of them made of soft materials. He is also part of a team using living cells to form simple biological robots, known as xenobots, that not only perform basic tasks, but can interact with and respond to their environment too.

Here, Bongard tells New Scientist how this work is suggesting entirely new ways to think about embodied cognition, and what counts as a robot, which could transform our approach to building intelligent machines.…