How not to misread science fiction

Not so futuristic: Saruman with a palantir in The Lord of the Rings

LANDMARK MEDIA/Alamy

We are approaching the Gregorian New Year, and it’s a great time to ponder what’s coming next. Are we about to use CRISPR to grow wings? Will we all be uploading our brains to the Amazon cloud? Should we wrap the sun in a Dyson sphere? If, like me, you are a nerd who loves science and engineering, sci-fi is the place you turn to imagine the answers. The problem is that most people are getting the wrong messages from these visions of tomorrow.

As a science journalist who also writes science fiction, I am giving you an end-of-year present: a quick guide to not misreading sci-fi stories. Pay attention, because all our civilisations depend on it.

There are two main ways that people misread sci-fi. Let’s start with the simpler one, known as the Torment Nexus Problem. It appears most often in tech conferences and business plans, and gets its name from an iconic social media post by the satirist Alex Blechman. In 2021, he wrote:

“Sci-Fi Author: In my book I invented the Torment Nexus as a cautionary tale

Tech Company: At long last, we have created the Torment Nexus from classic sci-fi novel Don’t Create The Torment Nexus”.

You get the idea. The Torment Nexus Problem crops up when people read, watch or play a sci-fi story and focus on its futuristic tech without paying attention to the actual point of the story.

As a result, you get billionaire Peter Thiel co-founding a company that specialises in data and surveillance called Palantir, named after the fantasy tech of the “seeing stones” in The Lord of the Rings that drive their users to evil and madness. Palantir’s products have been used by the Israel Defense Forces to strike targets in Gaza. Earlier this year, the firm signed a contract with the US government to build a system for tracking the movements of certain migrants. J. R. R. Tolkien would not be amused.

There are less disturbing examples as well. When Mark Zuckerberg decided to pivot Facebook to virtual reality, he renamed the company Meta, after the metaverse in Neal Stephenson's Snow Crash. But anyone who paid attention while reading the novel would know that this fictional metaverse isn't something to emulate. It's a hostile corporate space that unleashes a mind virus, one that causes people's brains to "crash" like computers.

You might be sensing a theme here. Thiel and Zuckerberg wanted to make fictional tech real and appear to have overlooked the fact that a palantir and the metaverse destroy people’s minds. That’s a profound misreading of sci-fi.

The second major way people misread science fiction could be called the Blueprint Problem. Essentially, it’s the mistaken idea that sci-fi provides an exact model for what is coming next and if we replicate what happens in sci-fi, we will arrive in a glorious future.

The Blueprint Problem inspired a lot of early space programmes in the 1950s, which prioritised putting humans into space rather than exploring it remotely with robotic spacecraft. Generations of people had watched Flash Gordon and read Edgar Rice Burroughs, and had been promised that people would fly spaceships to colonise alien worlds. Today, we have robots discovering incredible things on Mars and space probes grabbing chunks of asteroids for analysis. But the media are still more likely to make a fuss over Katy Perry riding in Jeff Bezos's rocket than to celebrate when the autonomous Voyager spacecraft crossed the termination shock near the edge of our solar system.

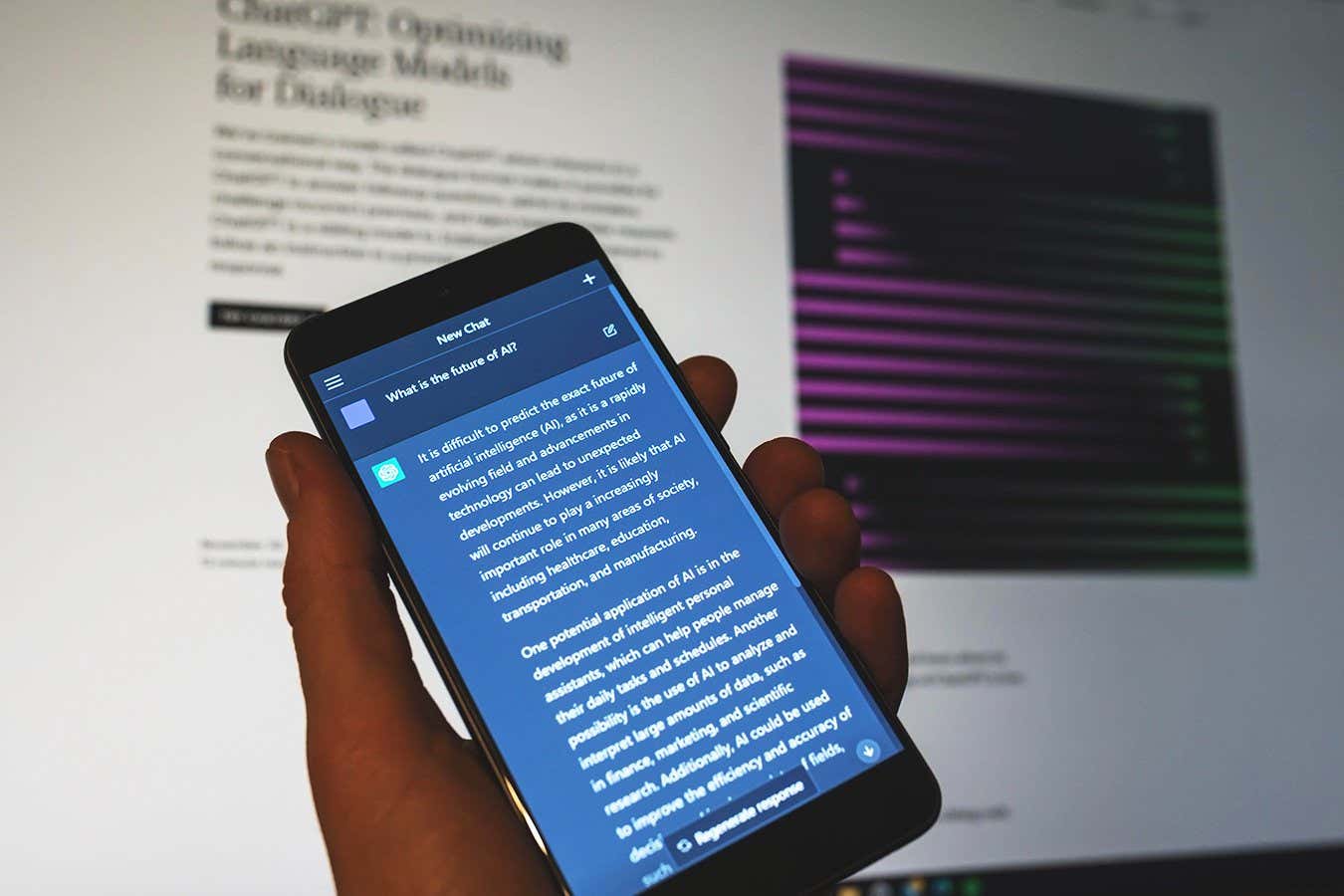

Most of the hype around AI products can also be blamed on the Blueprint Problem. We were promised AI servants and savants in so much sci-fi over the past century that robocops and holographic doctors have come to feel inevitable. But they aren’t.

Science fiction isn’t a map, a recipe book or a prescription. Instead, it is a world view, a way of approaching problems with the underlying assumption that things don’t have to be the way they are. This conviction inspired the book We Will Rise Again, a sci-fi anthology about social change that I co-edited with Karen Lord and Malka Older. We collected stories and essays intended to dislodge people’s preconceptions about where human civilisations are headed. In our book, the future isn’t predestined; it’s a process, and people are actively shaping it.

The more you appreciate this process, the weirder the present-day world starts to seem. Why do we build machines to fold tissues into boxes? Why do we believe in invisible lines called borders? Why do we assume there are only two immutable genders? Asking those kinds of questions is the real point of science fiction. They are the gateways to new worlds.

If you want to build a better future, you cannot merely replicate something you read. You must imagine it yourself.

Annalee Newitz is a science journalist and author. Their latest book is Automatic Noodle. They are the co-host of the Hugo-winning podcast Our Opinions Are Correct. You can follow them @annaleen and their website is techsploitation.com

What I’m reading

404 Media, an incredible online publication for investigative journalism about tech.

What I’m watching

Heated Rivalry, a gay ice hockey romance series that is extremely Canadian.

What I’m working on

Planning the European tour for sci-fi anthology We Will Rise Again.